Vision and touch employ a common strategy: To make use of both these senses, we must actively scan the environment. When we look at an object or scene, our eyes continuously survey the world by means of tiny movements; when exploring an object by touch, we move the tips of our fingers across its surface. Keeping this shared feature in mind, Weizmann Institute of Science researchers have designed a system that converts visual information into tactile signals, making it possible to "see" distant objects by touch.

Converting information obtained with one sense into signals perceived by another – an approach known as sensory substitution – is a powerful means of studying human perception, and it holds promise for improving the lives of people with sensory disabilities, particularly the blind. But even though sensory substitution methods have been around for more than fifty years, none have been adopted by the blind community for everyday use.

The Weizmann researchers assumed that the main obstacle has been the fact that most methods are incompatible with our natural perception strategies. In particular, these methods leave out the component referred to as active sensing: Most vision-to-touch systems make finger movement unnecessary by converting visual stimuli into vibratory stimulation of the skin.

Dr. Amos Arieli and Prof. Ehud Ahissar of the Neurobiology Department, together with intern Dr. Yael Zilbershtain-Kra, set out to develop a vision-to-touch system that would more closely mimic the natural sense of touch. The idea was to enable the user to perceive information by actively exploring the environment, without the confusing intervention of artificial stimulation aids.

"Our system not just enables but, in fact, forces people to perform active sensing – that is, to move a hand in order to 'see' distant objects, much as they would to palpate a nearby object," Arieli says. "The sensation occurs in their moving hand, as in regular touch."

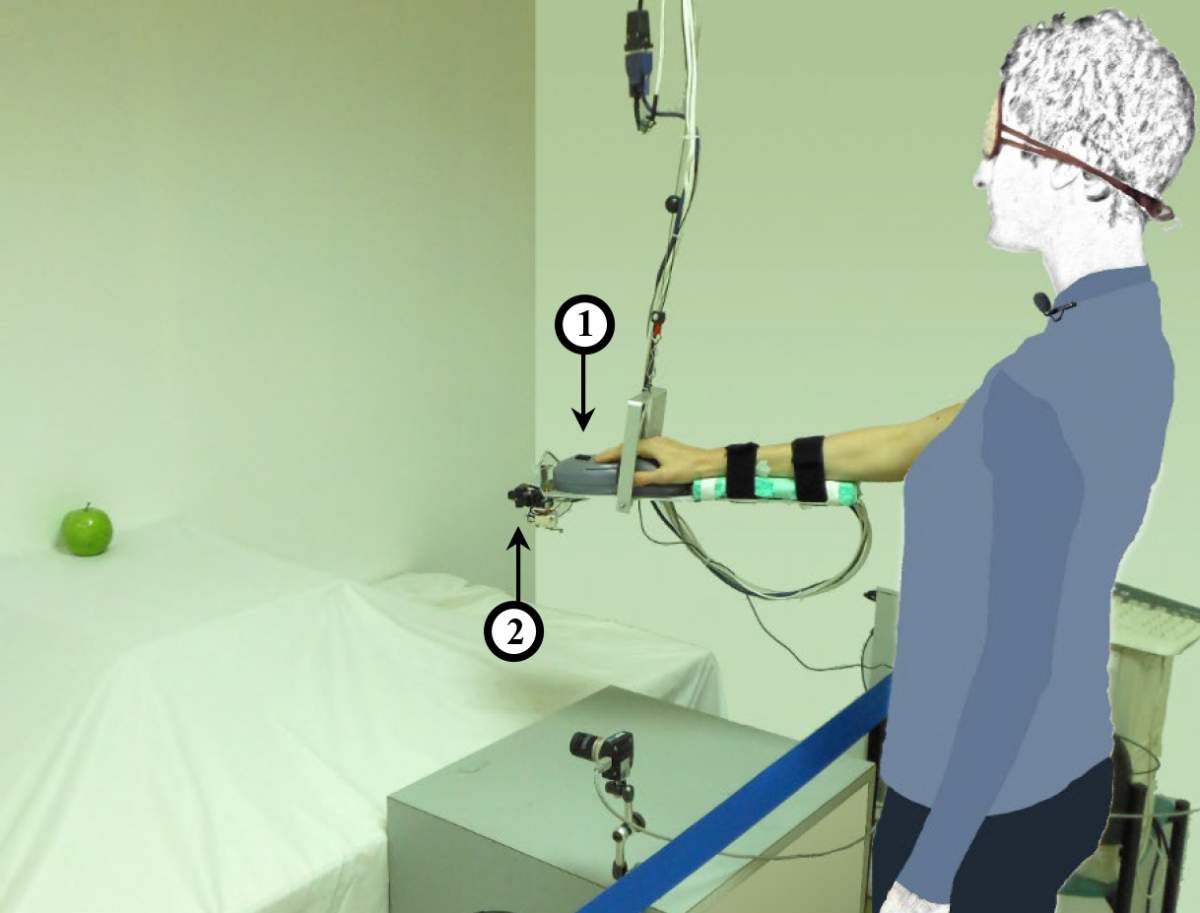

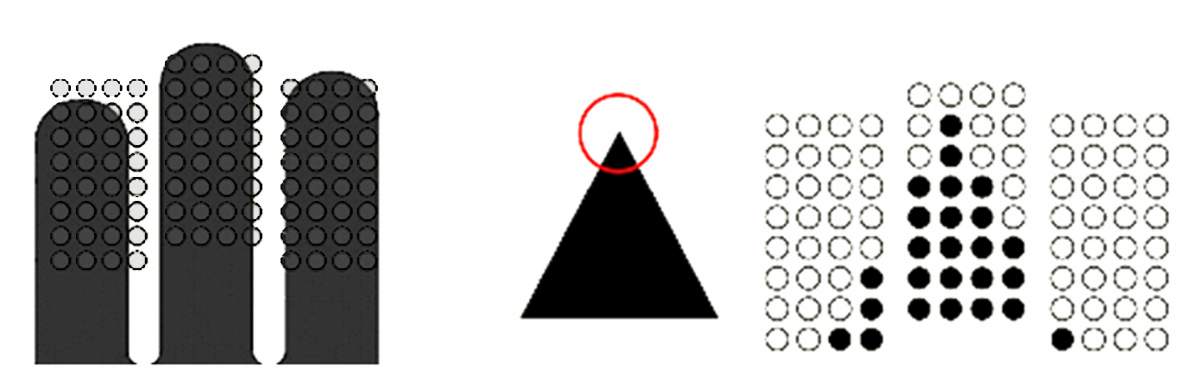

In the Weizmann system – called ASenSub – a small lightweight camera is attached to the user's hand, and the image it captures is converted into tactile signals via an array of 96 pins placed under the tips of three fingers of the same hand. After the camera's frame is mapped onto the pins, the height of each pin is determined by the brightness of the corresponding pixel in the frame. For example, if the camera scans a black triangle on a white surface, the pins corresponding to white pixels stay flat, while those mapped to black pixels are raised the moment the camera meets the triangle, producing a virtual feeling of palpating an embossed triangle.
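The brightness-to-height mapping described above can be illustrated with a short sketch. This is not the ASenSub implementation: the 8-by-12 pin grid, the block averaging and the function name `frame_to_pin_heights` are assumptions made for illustration, since the description gives only the total of 96 pins and says that each pin's height follows the brightness of its corresponding pixel.

```python
# Hypothetical layout: 96 pins arranged as 8 rows x 12 columns.
# The article states only the total (96 pins under three fingertips).
PIN_ROWS, PIN_COLS = 8, 12


def frame_to_pin_heights(frame, rows=PIN_ROWS, cols=PIN_COLS):
    """Map a grayscale frame (list of rows, values 0-255) to pin heights in [0, 1].

    Each pin covers a rectangular block of pixels. Darker blocks raise the pin
    more: 0.0 means flat (white), 1.0 means fully raised (black).
    """
    height, width = len(frame), len(frame[0])
    if height < rows or width < cols:
        raise ValueError("frame is smaller than the pin grid")
    pins = []
    for r in range(rows):
        y0, y1 = r * height // rows, (r + 1) * height // rows
        pin_row = []
        for c in range(cols):
            x0, x1 = c * width // cols, (c + 1) * width // cols
            block = [frame[y][x] for y in range(y0, y1) for x in range(x0, x1)]
            mean_brightness = sum(block) / len(block)
            pin_row.append(1.0 - mean_brightness / 255.0)
        pins.append(pin_row)
    return pins


# Usage: a white 16x24 frame with a black 4x4 square in the top-left corner.
frame = [[255] * 24 for _ in range(16)]
for y in range(4):
    for x in range(4):
        frame[y][x] = 0

pins = frame_to_pin_heights(frame)
```

In this sketch, the pins under the black square are fully raised while all others stay flat. As the hand-mounted camera moves, each new frame would be mapped the same way, so the raised pattern shifts under the fingertips much as an embossed shape would.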

Zilbershtain-Kra, with the help of ophthalmologist Dr. Shmuel Graffi, tested ASenSub in a series of experiments with blindfolded sighted participants and with people blind from birth. Both groups were first asked to identify two-dimensional geometrical shapes, then three-dimensional objects, such as an apple, a toy rhinoceros and a pair of scissors.

Following training of less than two and a half hours, both groups learned to identify objects correctly within less than 20 seconds – an unprecedented level of performance compared with existing vision-to-touch methods, which generally require lengthy training and enable perception that remains frustratingly slow. No less significant was the fact that the high performance was preserved over a long period: Participants invited for another series of experiments nearly two years later were quick to identify new shapes and objects using ASenSub.

Yet another striking quality of ASenSub: It gave blind-from-birth participants a true "feel" for what it's like to see objects at a distance. Says Graffi: "As a clinician, it was fascinating for me that they could actually experience optical properties they'd previously only heard about, such as shadows or the reduced size of distant objects."

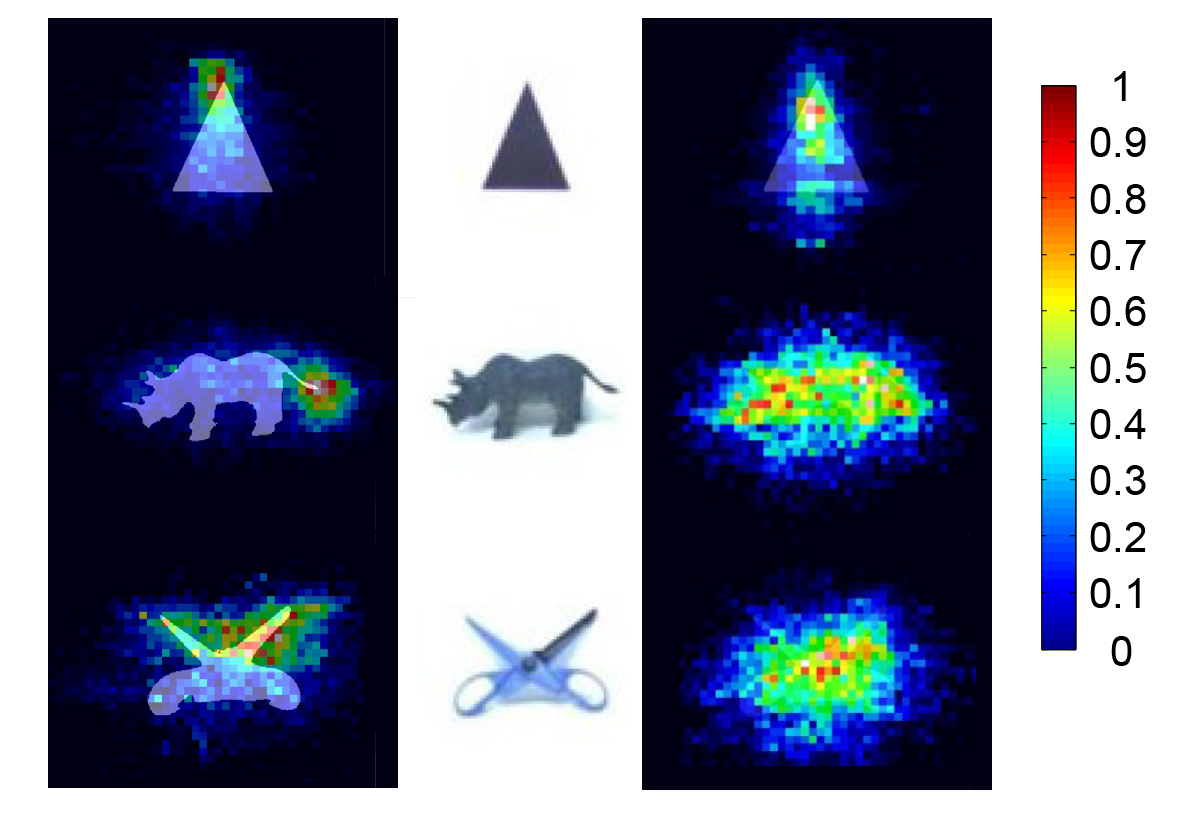

Sighted and blind participants performed equally well in the experiments, but analysis of the results showed that their scanning strategies were different. Sighted people tended to focus to a great extent on an object's unique feature, for example, the tip of the triangle, the rhino's tail or the blades of the scissors. In contrast, blind people traced each object along its entire contour, much as they commonly do to identify objects by unaided touch.

In other words, people relied on a strategy that's most familiar to them through experience, which suggests that it's learning and experience that mainly guide us in the use of our senses, rather than some inborn, genetically preprogrammed property of the brain. And this conclusion, in turn, suggests that in the future, it may be possible to teach people with sensory disabilities to make more optimal use of their senses.

"In broader terms, our study provides further support for the idea that natural sensing is primarily active," Ahissar says. "We let people be active and to do so in an intuitive way, using their automatic perceptual systems that work with closed loop interactions between the brain and the world. This is what likely led to dramatic improvement compared to other vision-to-touch methods." Zilbershtain-Kra adds: "Our approach has demonstrated the brain's amazing plasticity, which, in a way, enabled people to acquire a new 'sense.' After seeing how fast they acquired a new perception method via active sensing, I've started applying similar principles when teaching students – making sure that they stay active throughout the learning process."

The ASenSub system may be used for further fundamental studies of human perception, and it can be applied for daily use by the blind. For the latter purpose, it needs to be scaled down to a miniature device that can be worn as a glove or incorporated into a walking cane.

Compared with existing methods, the system, which converts visual information into tactile signals based on “active sensing,” improved the perceptual accuracy and the speed of identifying both 2-D and 3-D objects by an average of 300% and 600%, respectively.

Prof. Ehud Ahissar is the incumbent of the Helen Diller Family Professorial Chair in Neurobiology.