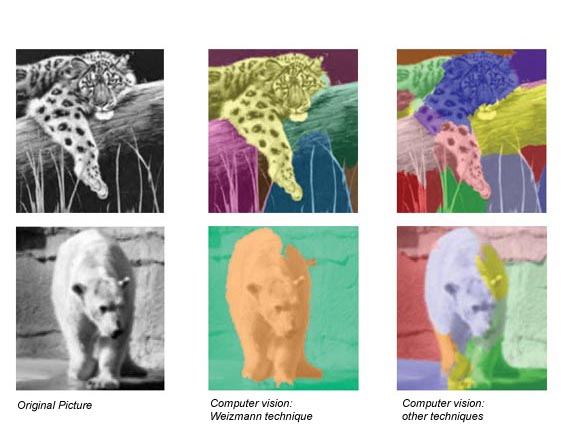

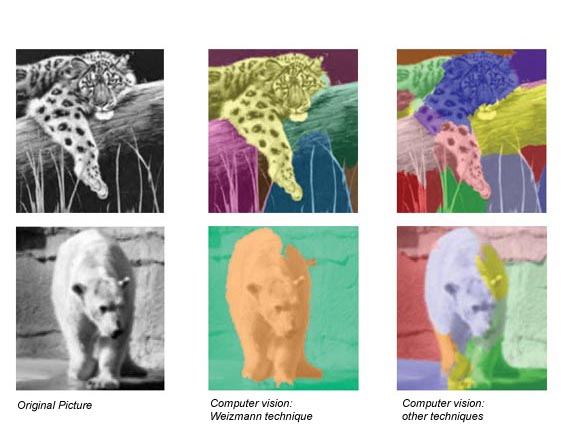

Prof. Ronen Basri, Dr. Meirav Galun and Prof. Achi Brandt. Right: Dr. Eitan Sharon. Computer vision: Colorful Ph.D. work

Skeptic: Billions of nerve cells in the human brain are involved in the process of vision. Since scientists find it difficult to understand even one of those cells, it's inconceivable that computers will ever be able to see.

Scientist: All the more reason to start working on it.

If one could make a computer see, reproducing the natural visual process, one would be adding an important dimension to many aspects of life: Computers could lend an 'extra eye' in the operating room, alerting doctors to problems; surveillance systems would be greatly improved; and quality control in production lines could be significantly enhanced. Along the way, we might attain crucial insights into how the brain constructs images.

The problem is the way computers read images. They see them as grids whose tiny squares (pixels) each give the computer only one type of information: color. Color, though, can be an ambiguous guide when it comes to distinguishing between objects. A yellow butterfly on a yellow flower could be mistaken by the computer for part of the flower. A clown, displaying numerous colors, could be interpreted as many different objects. A zebra, with its two alternating colors, could be split into as many objects as it has stripes.

Recently, a team from the Weizmann Institute devised a method that significantly improves computers' ability to see. The new approach works from the bottom up, starting with single pixels. Each pixel in the image is compared to its neighbors in terms of color. As groups of pixels emerge, they are compared to one another using a wide range of more complex parameters: texture (the zebra's alternating black and white stripes emerge as a 'texture' characterizing one object), shape (groups of pixels, unlike individual pixels, form shapes), average fluctuations in color, and more.

Groups with similar parameters are joined. The bigger the groups, the more refined and complex the parameters can be. The different objects in the image are separated according to the combination of parameters, which are so diverse that the margin of error is very small.
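To make the bottom-up idea concrete, here is a minimal Python sketch under loudly stated assumptions: the image is a small grid of grayscale intensities, "similar" means a feature distance below a hypothetical `threshold`, and groups are joined by simple threshold-based region merging with union-find. This toy technique is swapped in for illustration and is not the Weizmann team's actual algorithm. It only shows the pattern described above: single pixels are compared by intensity alone, while larger groups are also compared on the spread of intensities inside each group, a crude stand-in for "average fluctuations in color".

```python
from statistics import pstdev


def segment(image, threshold=10.0, max_levels=10):
    """Toy bottom-up segmentation (illustrative sketch, not the published method).

    image: 2D list of grayscale intensities (hypothetical input format).
    Returns a grid of the same shape holding a segment label per pixel.
    """
    h, w = len(image), len(image[0])
    # Level 0: every pixel is its own group.
    labels = [[r * w + c for c in range(w)] for r in range(h)]
    for level in range(max_levels):
        # Gather the pixel values belonging to each current group.
        members = {}
        for r in range(h):
            for c in range(w):
                members.setdefault(labels[r][c], []).append(image[r][c])
        # Features per group: mean intensity and intensity spread.
        feats = {g: (sum(v) / len(v), pstdev(v)) for g, v in members.items()}
        # Collect pairs of adjacent groups (4-neighbourhood).
        pairs = set()
        for r in range(h):
            for c in range(w):
                a = labels[r][c]
                for rr, cc in ((r + 1, c), (r, c + 1)):
                    if rr < h and cc < w and labels[rr][cc] != a:
                        b = labels[rr][cc]
                        pairs.add((min(a, b), max(a, b)))
        # Union-find over the groups of this level.
        parent = {g: g for g in members}

        def find(g):
            while parent[g] != g:
                parent[g] = parent[parent[g]]  # path halving
                g = parent[g]
            return g

        merged = False
        for a, b in pairs:
            (ma, sa), (mb, sb) = feats[a], feats[b]
            dist = abs(ma - mb)
            if level > 0:
                # From the second level on, groups hold several pixels,
                # so the spread becomes a meaningful extra parameter.
                dist += abs(sa - sb)
            if dist < threshold:
                ra, rb = find(a), find(b)
                if ra != rb:
                    parent[rb] = ra
                    merged = True
        if not merged:
            break  # nothing left to join: the hierarchy is complete
        labels = [[find(labels[r][c]) for c in range(w)] for r in range(h)]
    return labels
```

For example, `segment([[10, 12, 200, 205], [11, 10, 198, 202]])` splits the grid into a dark left half and a bright right half. Because merging is transitive, a slow gradient can chain into a single segment; weighing many parameters at once, as the method described above does, is one way to guard against such leaks.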

It doesn't sound as though any of this can be done in the blink of an eye, but in fact this method is much faster than all other existing methods. The reason: in the first stages, when individual pixels or small groups of pixels are compared, only a few 'simple' parameters are used. At later stages, many more complex parameters are used to compare large groups of pixels, but by then there are far fewer groups to compare. Thus even the complex parameters are not as time-consuming as might be expected.
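The speed argument can be made concrete with a back-of-the-envelope estimate. Assume, purely for illustration, an image of $N$ pixels in which each level of grouping cuts the number of groups by a factor $r > 1$, while the cost of comparing one group grows by a factor $q$ per level (more, and more complex, parameters), with $1 \le q < r$ and a first-level cost $c_0$ per pixel. These rates are hypothetical, not figures from the research. Summing over levels $k = 0, \dots, L$ gives a geometric series:

$$
W \;=\; \sum_{k=0}^{L} \frac{N}{r^{k}}\, c_0\, q^{k} \;=\; N c_0 \sum_{k=0}^{L} \left(\frac{q}{r}\right)^{k} \;<\; N c_0\, \frac{r}{r-q}.
$$

With $r = 4$ and $q = 2$, for instance, the total stays below $2 N c_0$, about twice the cost of the first, simplest pass alone. Under these assumptions the work grows only linearly with the number of pixels, as long as groups shrink faster than the per-group cost rises.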

The technique was developed by then Ph.D. student Eitan Sharon under the supervision of Profs. Achi Brandt and Ronen Basri and in collaboration with Dr. Meirav Galun, all in the Applied Mathematics and Computer Science Department. They are currently working to improve the approach.

Of course, even if a computer is finally able to distinguish easily between objects, other obstacles will have to be overcome before it is able to 'see' as we do. For one thing, it will have to be taught to interpret what it sees and to correctly categorize objects (for instance, to understand that a poodle and a German shepherd both belong in the 'dog' category despite their different appearances). Thanks to the complexity of our brains, we perform these functions easily. Will scientists find a way to simplify these functions so that they can be incorporated into computers? It may take a while until we find out.

Prof. Brandt's research was supported by the Carl F. Gauss Minerva Center for Scientific Computation. He is the incumbent of the Elaine and Bram Goldsmith Professorial Chair of Applied Mathematics.

Prof. Basri conducts his research in the Moross Laboratory for Vision Research and Robotics.
