The goal: To create photovoltaic cells that are highly efficient, easy to produce and economical enough to put on every rooftop. If new materials that have recently come onto the solar cell scene live up to their promise, that goal could possibly be met within the next few years. Prof. David Cahen of the Materials and Interfaces Department says: “It will get to the point that the cells themselves will be so cheap that the external costs involved in producing photovoltaics and installing them will be what ultimately determine their price.”

Cahen and Prof. Gary Hodes of the same department are big fans of these materials, called perovskites. Based on such inexpensive metals as tin or lead, the compounds in this group of materials all share a particular crystal structure. “Perovskite cells are the first inexpensive type of solar cell to work in the high-energy part of the spectrum and give high voltage. And they are so easy to make, you can basically produce them on a hot plate,” says Hodes.

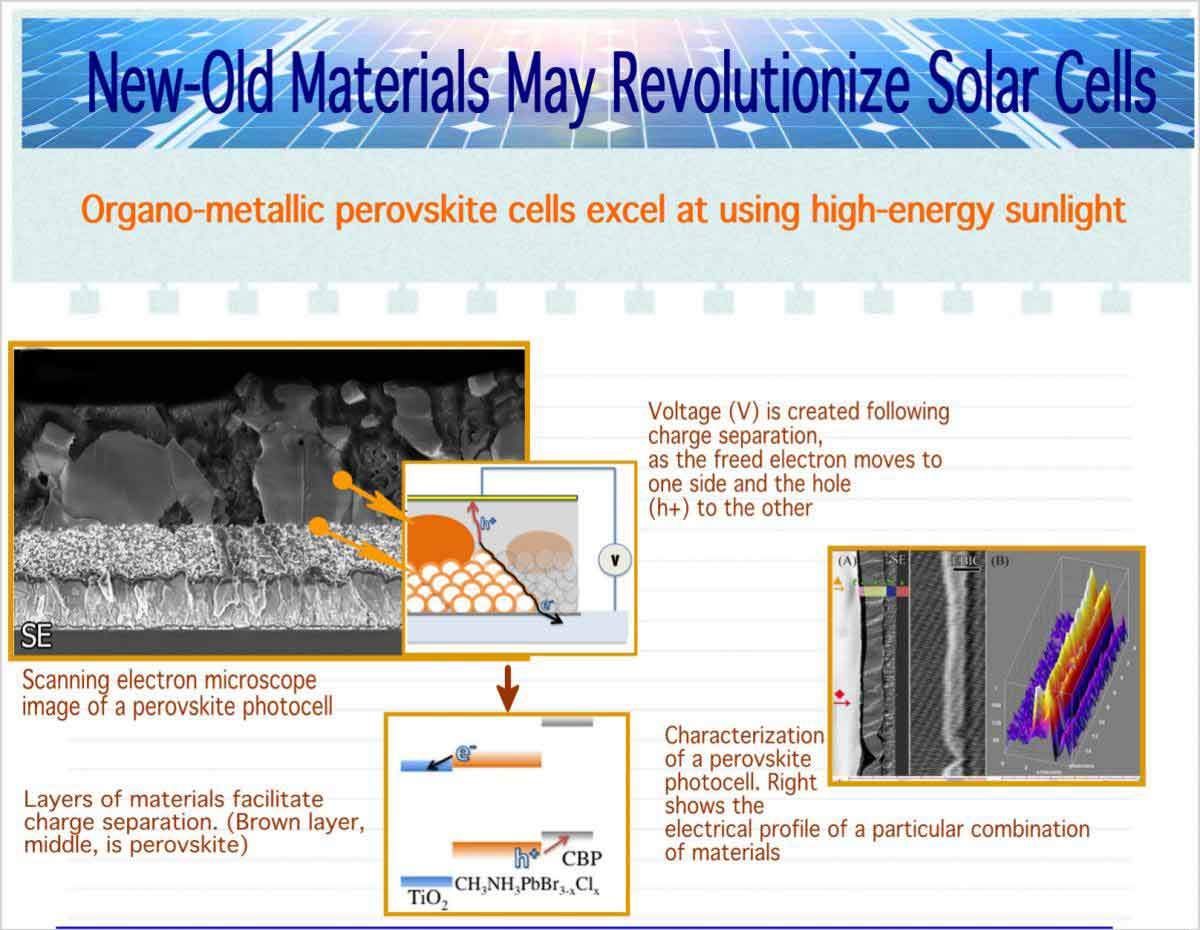

Ninety percent of today’s photovoltaic cells are made with silicon, explains Cahen. Silicon is an inexpensive, abundant material that can allow a current of electrons to flow when photons from sunlight hit its surface. To complete the process, other materials added to the solar cell facilitate charge separation: sending the electrons to one side of the cell for use and the “holes” they leave behind to the other.

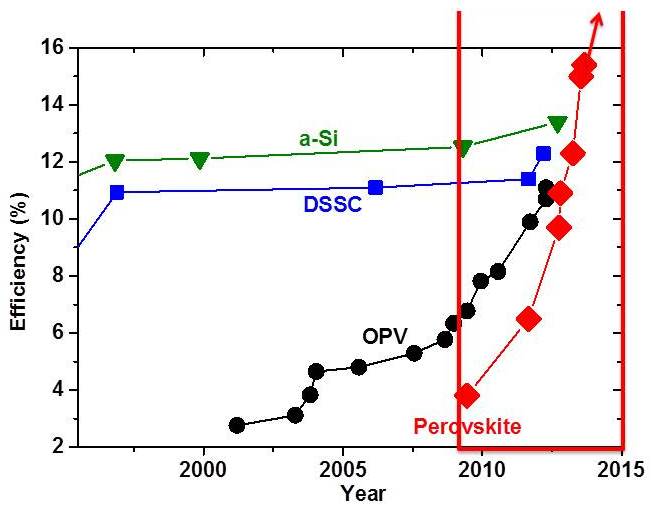

But even the very best future silicon module, working at its peak, can use only up to 25% of the sunlight that falls on its surface. Wavelengths at the lower end of the spectrum – for example infrared – do not have enough energy to free silicon’s electrons, while those at the higher end are so energetic that most of their energy is lost. Scientists trying to improve the efficiency of solar cells have been investigating materials that can make better use of those higher-energy photons, which could provide more electricity from the same surface area. Over the years many of these groups – Cahen and Hodes’s among them – have made progress, mostly incremental, in improving efficiency. But both the slow pace and the complexity of these improvements have often been frustrating.
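The two loss mechanisms described above can be put into rough numbers. What follows is a minimal sketch, not the researchers' method. It assumes a textbook band gap for silicon of about 1.12 eV (a figure not given in the article) and an idealized cell that keeps exactly one band gap's worth of energy from each absorbed photon. The function names are hypothetical.

```python
# Rough illustration of why low-energy photons are wasted and
# high-energy photons are only partly used in a single-gap cell.

HC_EV_NM = 1239.84          # Planck constant times speed of light, in eV*nm
SILICON_BANDGAP_EV = 1.12   # assumed textbook value, not from the article


def photon_energy_ev(wavelength_nm: float) -> float:
    """Energy of one photon (eV) for a wavelength given in nanometers."""
    return HC_EV_NM / wavelength_nm


def usable_fraction(wavelength_nm: float,
                    bandgap_ev: float = SILICON_BANDGAP_EV) -> float:
    """Fraction of a photon's energy an idealized cell could keep.

    Photons below the band gap free no electrons (fraction 0).
    Photons above it contribute only the band-gap energy; the
    excess is assumed to be lost as heat.
    """
    energy = photon_energy_ev(wavelength_nm)
    if energy < bandgap_ev:
        return 0.0
    return bandgap_ev / energy


if __name__ == "__main__":
    # Infrared, near-infrared, orange and violet light, respectively.
    for wavelength in (1500, 1000, 600, 400):
        print(f"{wavelength} nm: "
              f"{photon_energy_ev(wavelength):.2f} eV per photon, "
              f"usable fraction {usable_fraction(wavelength):.2f}")
```

In this simplified picture the usable fraction falls steadily as the light moves toward the blue end of the spectrum, which is why a material with a larger band gap and higher voltage is attractive for capturing those photons.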

Perovskites leapt onto the solar cell scene in 2009 when a group led by Prof. Tsutomu Miyasaka from Yokohama, Japan, used them in a special type of solar cell. While the efficiencies they achieved were respectable, the stability of their cells was very poor. Within a short time, the groups of Profs. Nam-Gyu Park and Sang-Il Seok in Korea, Prof. Henry Snaith (see below) in the UK and Prof. Michael Graetzel in Switzerland showed the way to better efficiencies and stability. Snaith, who had recently set up his lab at Oxford University, and others showed that cells using these materials gave relatively high voltages. Soon these groups were creating experimental perovskite solar cells that rivaled silicon alternatives in efficiency and produced higher voltage.

Cahen and Hodes immediately recognized the potential of this material to fill in the “missing link” – that is, a cheap cell that yields high voltages, efficiently using the high-energy part of the solar spectrum. In a number of recent papers published together with Dr. Saar Kirmayer and PhD student Eran Edri in Nature Communications, with Tel Aviv University colleagues in Nano Letters, and with colleagues from Princeton University in Energy & Environmental Science, they elucidated the mechanisms by which perovskites manage to convert sunlight into useful electricity with high-voltage efficiency, as well as demonstrating some methods of improving on solar cells made of these materials. In two studies that appeared in The Journal of Physical Chemistry Letters, they and MSc student Michael Kulbak tested perovskites layered with various materials that act as hole conductors for improved charge separation. These yielded high-voltage cells that surpassed 1.5V (silicon yields around 0.7V, by comparison). Their results, they say, can point the way toward further improvements.

What is the secret of these materials? Cahen and Hodes say their research and that of others points to the crystal structure. The materials form high-quality structures – that is, containing few irregularities. The behavior and efficiency of the perovskite cells fit a model that Cahen and his coworkers had proposed some years back, which says that this order is critical. Furthermore, the material simultaneously contains both inorganic (lead and iodide or bromide) and organic (containing carbon and hydrogen rings) components. It is the way these components fit together that makes perovskites so useful for photovoltaic cells: Like silicon, they form nicely ordered crystalline structures, but at the same time, the interactions between the organic parts are weak, making surfaces that allow electrons to cross easily from grain to grain.

At present, very small perovskite photocells have achieved 16% efficiency, and it appears likely that they can reach, and possibly surpass, 20%. There are still a few hurdles to overcome, cautions Cahen. For one, these materials must prove they are stable over time. For another, the compound contains a small amount of lead, and care must be taken either to find a substitute or to ensure that this toxic element cannot be released into the environment. Neither of these issues, say Cahen and Hodes, has dampened their enthusiasm. Perovskites have renewed both interest in the field and the hope that solar energy may finally become a widespread alternative to fossil-fuel-generated electricity.

Visit

Prof. Henry Snaith was as surprised as anyone by the rapid success of perovskite photovoltaics. “We looked at a whole range of materials before beginning to work with them,” he says. “Normally, if you start with 1% efficiency, you’re happy just to have proved that the material has photovoltaic properties. We started at 6%; within six months we had achieved 10%; and now we are already at 16% – nearly the efficiency of silicon.”

Snaith was recently in Israel as a guest of the Weizmann Institute, and through the Institute, he appeared at the annual meeting of the Israel Chemical Society. During his visit – just two days – he met with Cahen and Hodes and their groups. Snaith and the Weizmann scientists are at present undertaking a collaborative project that is supported by an initiative of Weizmann UK. They are working on widening the range of light energy the material can absorb. Snaith notes that he is looking forward to working with the Institute’s scientists: “Fantastic work is being done here at the Weizmann Institute,” he says.

Prof. David Cahen’s research is supported by the Mary and Tom Beck Canadian Center for Alternative Energy Research, which he heads; the Nancy and Stephen Grand Center for Sensors and Security; the Ben B. and Joyce E. Eisenberg Foundation Endowment Fund; the Monroe and Marjorie Burk Fund for Alternative Energy Studies; the Leona M. and Harry B. Helmsley Charitable Trust; the Carolito Stiftung; the Wolfson Family Charitable Trust; the Charles and David Wolfson Charitable Trust; and Martin Kushner Schnur, Mexico. Prof. Cahen is the incumbent of the Rowland and Sylvia Schaefer Professorial Chair in Energy Research.

Prof. Gary Hodes’s research is supported by the Nancy and Stephen Grand Center for Sensors and Security; and the Leona M. and Harry B. Helmsley Charitable Trust.

Working under Pressure

No man-made lubricant can even come near to meeting the daily demands we make of our joints. As we run or walk, the surfaces of the cartilage in our hip and knee joints slide over one another at high pressures. And they do so over and over, every day, for many decades. Two new studies in the group of Prof. Jacob Klein of the Weizmann Institute’s Materials and Interfaces Department, which appeared in Nature Communications, are helping to solve the puzzle of joint lubrication, and they may, in the future, point the way to treatments for problems with this lubrication, especially osteoarthritis. One in three people suffers from osteoarthritis – the most common form of arthritis – by age 65, and nearly one in two by age 85. Younger people often develop the disease after sporting or other accidents, and there is no cure.

Part of the reason that the joint lubrication system had not been revealed until now, says Klein, is that it forms a very thin layer on the cartilage surface, and its properties are not understood. Moreover, of the many different molecular substances in the joints, several have been proposed to be the main lubrication molecule. The three main molecules that have been suggested for the role of lubricant are phospholipids, hyaluronan (a chain-like, polymer molecule) and lubricin (a protein). The problem is that in some five decades of tests and experiments, none of them alone has performed nearly as well as the body’s actual lubrication system.

Klein and postdoctoral fellow Jasmin Seror suggested a way out of this bind: What if all three molecules worked together to lubricate the joints? They devised an experiment using two of the molecules. Working together with Linyi Zhu, a visiting student from the Chinese Academy of Sciences, Dr. Ronit Goldberg, a senior intern in Klein’s group, and Prof. Anthony Day of the University of Manchester, they created a model lubricating system of phospholipids and hyaluronan placed between atomically smooth test surfaces made of the mineral mica. They then applied a wide range of loads to the surfaces, mimicking the pressures in actual joints, to see how well the two layers could slide when pressed.

Klein and his group had been studying the first type, phospholipids, because they are an unusual kind of lubricant that relies on water – via so-called hydration lubrication. Phospholipid molecules have two parts: a water-loving head and a couple of long, water-repelling tails. The heads have both a positive and a negative charge, and so they strongly attract water molecules, H2O, which also carry the two types of charge: negative on the oxygen and positive on the hydrogen. The water molecules form a hydration shell around the lipid head; it is this shell that works like a microscopic “ball bearing” and provides the lubrication.

Just the Right Resistance

In this model, the hydration shells on the phospholipid heads are what ultimately reduce the friction. How well do they work? One way to understand lubrication is to test the viscosity of the fluid substance – how resistant it is to flow. Anyone who has changed the motor oil in a car knows that the correct viscosity (the SAE numbers on the can) is important for keeping the motor running smoothly. The same is true of our joints. Not viscous enough, like plain water, and the substance would be squeezed out of the joint. Too viscous, like gelatin, and it would gum up the works. Hydration shells are a bit more complex, kept in place by the enclosed charges that attract the water molecules. But the question remained: Is their viscosity “just right,” so as to explain the lubrication?

To answer this, in the second study, Klein and his research group, including Liran Ma, Anastasia Gaisinskaya-Kipnis and Nir Kampf, investigated the viscosity of these watery shells. The researchers created simple hydration shells – small positive ions surrounded by water molecules. They then trapped these hydration shells between two atomically smooth surfaces and measured their viscosity by compressing and sliding those surfaces at different velocities. Their results showed that a fluid made up of these shells is about 200 times as viscous as water – around that of motor oil SAE 30 or cold maple syrup. This viscosity, measured in trapped hydration shells, turns out to provide a good explanation for the observed reduction in friction in the previous study.
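To get a feel for the scale of this result, here is a rough sketch, not the group's analysis. It assumes water has a viscosity of about 0.001 Pa·s at room temperature (a figure not given in the article) and treats the trapped layer as a simple Newtonian fluid, which is a strong simplification for a film only a few molecules thick. The sliding speed and gap used in the example are hypothetical, chosen only for illustration.

```python
# Back-of-the-envelope estimate based on the "200 times water" figure.

WATER_VISCOSITY_PA_S = 1.0e-3   # assumed room-temperature value, in Pa*s
SHELL_TO_WATER_RATIO = 200      # ratio reported in the article


def shell_viscosity(ratio: float = SHELL_TO_WATER_RATIO,
                    water_viscosity: float = WATER_VISCOSITY_PA_S) -> float:
    """Estimated viscosity (Pa*s) of the hydration-shell fluid."""
    return ratio * water_viscosity


def shear_stress(viscosity: float, sliding_speed: float, gap: float) -> float:
    """Newtonian shear stress (Pa) for a fluid film sheared between plates.

    viscosity in Pa*s, sliding_speed in m/s, gap in m.
    """
    if gap <= 0:
        raise ValueError("gap must be positive")
    return viscosity * sliding_speed / gap


if __name__ == "__main__":
    eta = shell_viscosity()
    # Hypothetical conditions: 1 micrometer per second across a 1 nm film.
    stress = shear_stress(eta, sliding_speed=1e-6, gap=1e-9)
    print(f"Estimated shell viscosity: {eta:.3f} Pa*s")
    print(f"Shear stress under the assumed conditions: {stress:.0f} Pa")
```

The point of the estimate is only that the shells resist flow far more than bulk water does, so they are not simply squeezed out under load, while remaining fluid enough to shear easily.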

Taken together, says Klein, these experiments will lead to both a better understanding of the complex lubrication system that keeps our joints in condition and, hopefully, in the future, to treatments for osteoarthritis.

Prof. Jacob Klein's research is supported by the European Research Council; the Charles W. McCutchen Foundation; the Minerva Foundation; the Israel Science Foundation; and the ISF-NSFC joint research program. Prof. Klein is the incumbent of the Hermann Mark Professorial Chair of Polymer Physics.